On the dangers of stochastic parrots: Can language models be too big?

Bender, Emily M., et al. “On the dangers of stochastic parrots: Can language models be too big?” Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency; Association for Computing Machinery: New York, NY, USA. 2021.

In December 2020, Dr Timnit Gebru, previously co-lead of the Ethical AI team at Google, departed the company at the culmination of a conflict surrounding this paper. Lacking firsthand knowledge about the events, I won’t comment on that except to note that the subsequent media blow-up probably ended up giving this paper more visibility than it otherwise would have had.

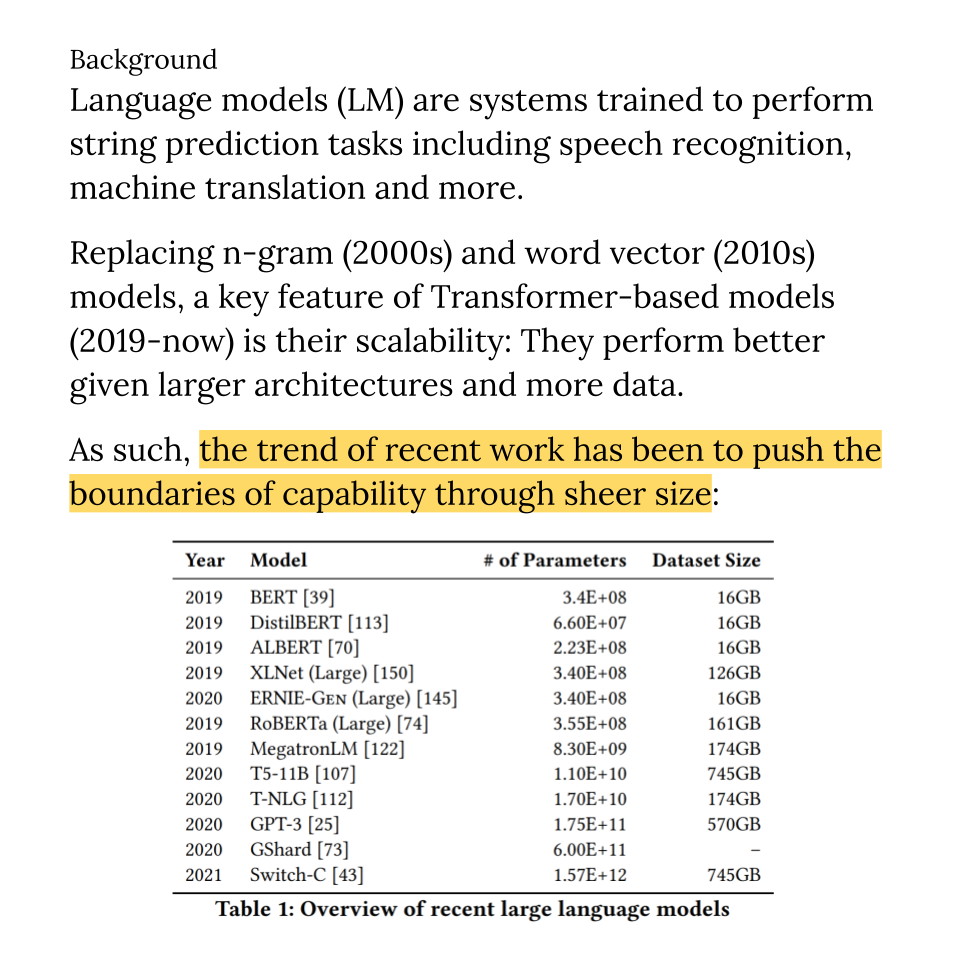

The paper itself addresses important questions that are central to current research on the fairness and accountability of AI systems. Its criticisms are aimed squarely at the large language models that have been all the rage lately (in both a good and a bad sense): GPT-3, Switch-C and friends.

In my opinion, the authors highlight some very well-founded criticisms and risks, which I think many researchers nowadays are aware of but many are afraid to engage with head-on. Unfortunately, I think there is no longer any excuse for scientists to say “Leave the politics out of this, I just want to focus on the science!” We cannot simultaneously claim that AI will be the most transformative power of the century, and pretend that we can hide from the responsibility of that power.

That said, regarding the intimidating pile of challenges that lie ahead, I have hope that the community will be able to untangle the mess and solve the technical problems with technical solutions, while inviting more voices to the table to address the wider societal concerns. The AI community should absolutely not hold all the power and burden alone.

